Brain-inspired AI

GPT-3 is creating the next boom in technology, but many technologists laugh it off, saying that NLP today is chasing the wrong goals. So, what exactly are we aiming to achieve?

Simply put, GPT-3 is a language model that learns patterns by scanning through a large corpus of text. It falls under the broad umbrella of Natural Language Processing (NLP). To get a better understanding, let's dig into some of the processes going on underneath.

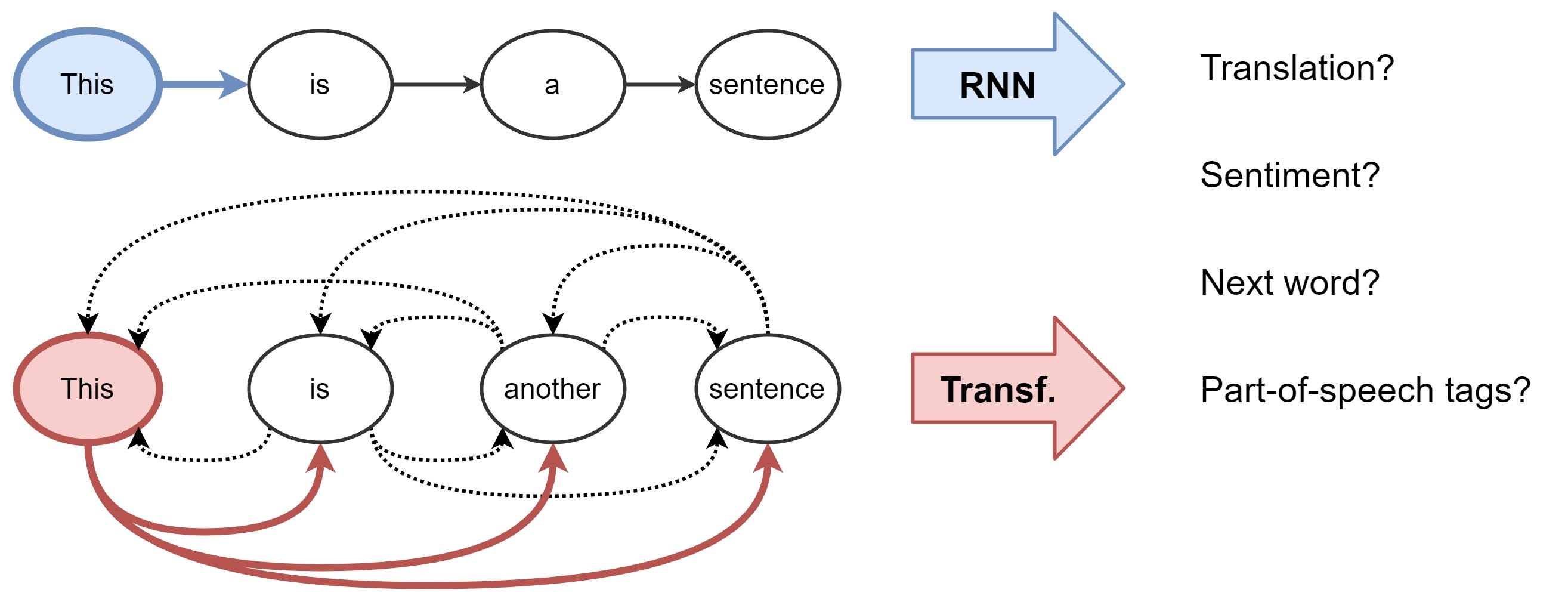

In the picture above, a sentence is fed into a system and connections are formed between its words. The algorithm is trying to understand the meaning of each word from the context it appears in. Now imagine running this on every sentence ever written on Wikipedia. The question is: is that how humans learn?
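
The idea that a word's meaning can be inferred from the company it keeps is often called the distributional hypothesis. The sketch below is a deliberately simplified illustration of that idea, not GPT-3's actual mechanism (GPT-3 is a transformer that builds contextual representations with attention). It counts which words appear within a small window of each other in a toy corpus and compares words by the cosine similarity of those counts. The corpus, the window size, and the function names are hypothetical choices made for illustration.

```python
from collections import Counter, defaultdict
import math


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical toy corpus: "cat" and "dog" share contexts, "car" mostly does not.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the mouse",
    "the dog chases the ball",
    "she drives the car to work",
]
vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))  # 0.917
print(round(cosine(vecs["cat"], vecs["car"]), 3))  # 0.433
```

Nobody tells the program that cats and dogs are both animals; the similarity falls out of shared context alone, which is the intuition (at a vastly smaller scale) behind learning from huge text corpora.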

OpenAI recognized the shortcomings of such a learning model. Firstly, needing a large, task-specific dataset for every new task limits the applicability of language models. Secondly, given its expressiveness, the model could start exploiting spurious correlations in narrow fine-tuning datasets. And lastly, humans don't require large supervised datasets to learn most language tasks.

That's where GPT-3's meta-learning comes in: the pattern-recognition skills acquired during pre-training are used to rapidly adapt to the text fed into the model at runtime. As a result, the system needs only a handful of examples in its input to predict what should come next. Its performance still lags behind fine-tuned models like BERT on many tasks, but the fact that it needs far less task-specific data is what creates all the buzz.
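
In practice, "adapting at runtime" means the task is demonstrated inside the input text itself, with no weight updates; the GPT-3 paper calls this few-shot (in-context) learning. Below is a minimal sketch of how such a prompt might be assembled. The helper name `build_few_shot_prompt`, the `=>` separator, and the example pairs are illustrative assumptions (the pairs are modeled on the English-to-French illustration in the GPT-3 paper), and no actual model API is called here.

```python
def build_few_shot_prompt(instruction, examples, query, sep=" => "):
    """Assemble a few-shot prompt: an instruction, k solved examples, then the unsolved query.

    A language model would be asked to continue the text after the final separator.
    No gradient update or fine-tuning happens anywhere in this process.
    """
    lines = [instruction]
    for source, target in examples:
        lines.append(f"{source}{sep}{target}")
    # The query ends with the separator, leaving the answer for the model to fill in.
    lines.append(f"{query}{sep.rstrip()}")
    return "\n".join(lines)


examples = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]
prompt = build_few_shot_prompt("Translate English to French:", examples, "cheese")
print(prompt)
```

Passing an empty `examples` list gives a zero-shot prompt and a single pair gives a one-shot prompt; the only thing that changes between these settings is the text the model reads, not the model itself.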

Even so, Elon Musk remains unimpressed. Let's take a step back and define the goals of technology today.

Even the slowest computers from the 1960s can, given enough memory, do everything a machine today does; the main difference is speed. They are just crunching 0s and 1s to add numbers or compare strings. What we need to understand, though, is that those aren't the basic principles of the human brain. But why would we change the foundation of computing just so machines act more like humans? For the most part, you have to agree with Feynman: why change the way machines fundamentally work, when the way they work now is far more efficient than the cumbersome, error-prone methods humans use? What we need to create is a new sense of mindful consciousness.
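
To make the "crunching 0s and 1s" point concrete: at the hardware level, even addition reduces to simple logic operations on bits. The sketch below adds two non-negative integers using only XOR (the per-bit sum, ignoring carries), AND (the positions that generate a carry), and a left shift (moving each carry to the next bit), which is the same logic an adder circuit implements in gates. It is a Python illustration with a hypothetical function name, not a model of any particular CPU.

```python
def add_with_logic_gates(a: int, b: int) -> int:
    """Add two non-negative integers using only XOR, AND, and left shifts."""
    if a < 0 or b < 0:
        raise ValueError("this sketch only handles non-negative integers")
    while b:
        partial_sum = a ^ b   # XOR: sum of each bit pair, ignoring carries
        carry = (a & b) << 1  # AND: bit pairs that overflow, moved one position left
        a, b = partial_sum, carry
    return a


print(add_with_logic_gates(13, 29))  # 42
print(bin(13), bin(29), bin(42))     # the same operation, seen as bit patterns
```

However fast the machine, this is the kind of operation it repeats; nothing in it resembles how a neuron integrates and fires.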

And so there's a new race in technology, one where thousands upon thousands of people are competing to create that mindfulness in computer chips. The idea is simple: we need an algorithm that thinks, one that embodies Descartes's famous line, "I think, therefore I am."

When I think about cognition, NLP is just one node in an interdependent food web. To create such a system, we can't look at NLP alone; we need to integrate all fields of the cognitive sciences. We no longer want a robot that can sit, talk, or cut tape efficiently. We want something aware of its existence, conscious of its actions, able to reciprocate altruism and empathize with others. The kind of intelligence we're going for is no longer going to be artificial. Translated into NLP terms, this means we want our AI to speak not because it was taught to answer back but because it needs to learn about its environment.

To tackle such a problem, we need to start at the roots. How does the brain gather, analyze, store, archive, retrieve, and synthesize data? These are the questions that lead us to reimagine computer architecture. We no longer want streams of binary but rather the intricate firing of neurons across synapses, which by some estimates amounts to roughly 20 quadrillion (2 × 10^16) bits of information transmitted per second. This calls for a radical change in the very fundamentals of computing. Stacks, queues, and memory addressing could become meaningless in such systems. Instead, we need new models of learning, complex systems in place of conventional data structures, and biologically plausible pipelining.
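
For a flavor of what "firing" means computationally, here is a minimal leaky integrate-and-fire (LIF) neuron, one of the simplest spiking-neuron models used in computational neuroscience and in neuromorphic hardware. Information is carried by when the neuron spikes rather than by a stored binary word. The function name and all parameter values (time step, membrane time constant, threshold, input current) are arbitrary illustrative assumptions, not biological measurements.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron using forward-Euler steps.

    input_current: one input value per time step (arbitrary units).
    Returns the indices of the time steps at which the neuron spiked.
    """
    v = v_rest
    spike_times = []
    for t, current in enumerate(input_current):
        # The membrane potential leaks toward rest while integrating the input.
        v += (dt / tau) * (v_rest - v + current)
        if v >= v_threshold:
            spike_times.append(t)  # emit a spike...
            v = v_reset            # ...and reset the membrane potential
    return spike_times


weak = simulate_lif([0.5] * 100)    # settles below threshold, never fires
strong = simulate_lif([1.5] * 100)  # crosses threshold periodically
print(weak)        # []
print(strong[:3])  # [10, 21, 32]
```

A stronger constant input makes the neuron fire more often, so the intensity of the input is encoded in the timing and rate of spikes rather than in a binary number held in memory.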

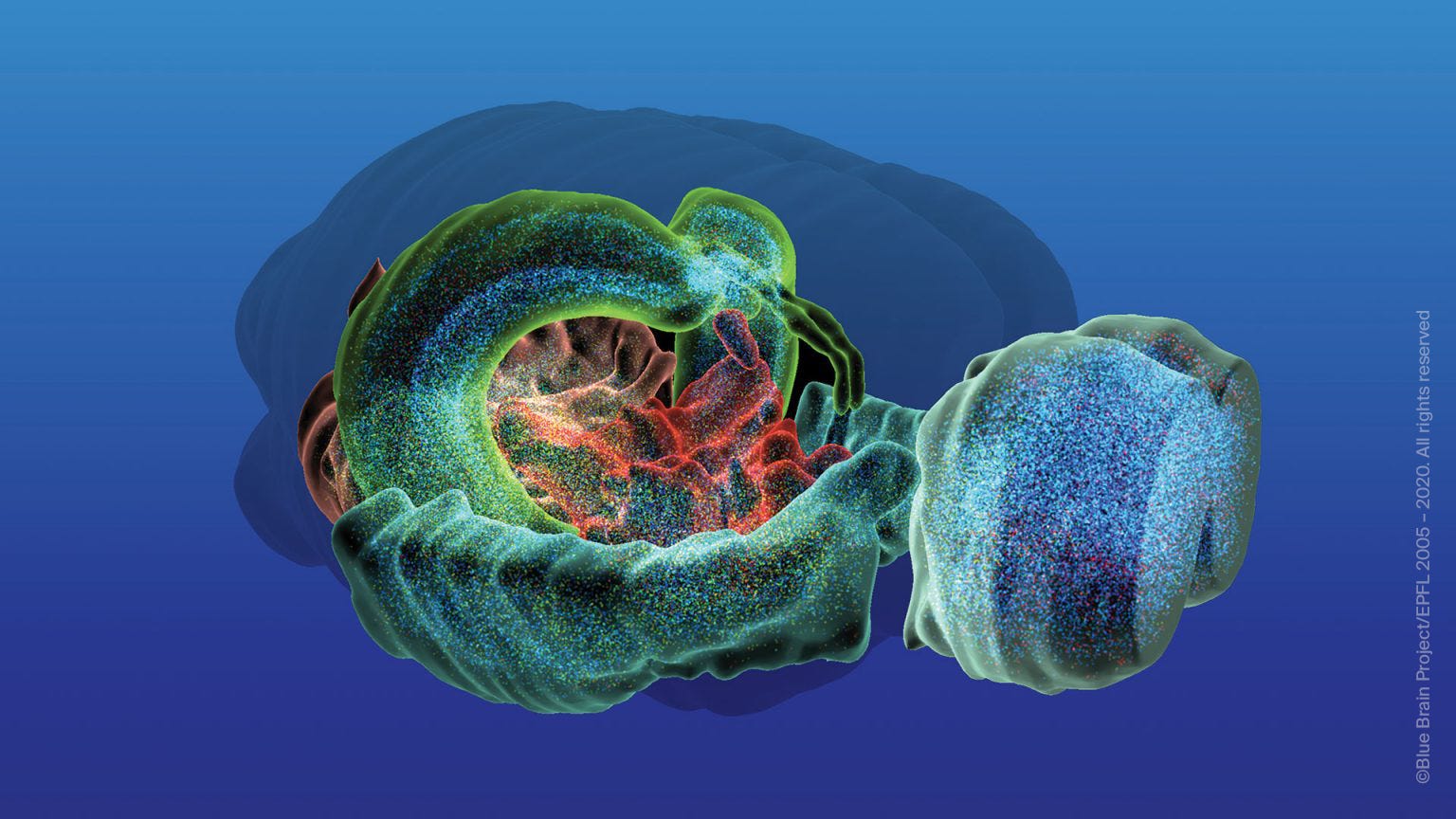

The Blue Brain Project creates a detailed simulation of the mouse brain.

The field that deals with designing brain-like models is called neuromorphics (neuromorphic computing). Its goal is to simulate a brain-like architecture that learns from fewer samples, mimics the plasticity of the human brain by changing its circuitry as a function of experience, and, on top of that, is as energy-efficient as the brain, which literally runs on hamburgers.
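
Plasticity, "changing the circuitry as a function of experience", is often modeled with some variant of Hebb's rule ("cells that fire together wire together"). The sketch below uses the plain Hebbian update Δw = η · pre · post, clipped to a maximum weight so the weights stay bounded. Real neuromorphic systems typically use richer rules such as spike-timing-dependent plasticity (STDP); the function name, learning rate, clipping bound, and activity patterns here are hypothetical.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1, w_max=1.0):
    """Return new synaptic weights after one Hebbian step.

    weights: current weight of each input synapse.
    pre: presynaptic activity (0 or 1) for each input.
    post: postsynaptic activity (0 or 1) of the output neuron.
    Synapses whose input was active while the output fired are strengthened.
    """
    return [min(w_max, w + learning_rate * x * post) for w, x in zip(weights, pre)]


weights = [0.2, 0.2, 0.2]
# Hypothetical "experience": input 0 is active every time the output fires,
# input 1 only half the time, input 2 never.
experience = [([1, 1, 0], 1), ([1, 0, 0], 1), ([1, 1, 0], 1), ([1, 0, 0], 1)]
for pre, post in experience:
    weights = hebbian_update(weights, pre, post)
print([round(w, 2) for w in weights])  # [0.6, 0.4, 0.2]
```

The "circuit" is never reprogrammed explicitly; the connections that were used together simply grow stronger, which is the sense in which experience rewires the network.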

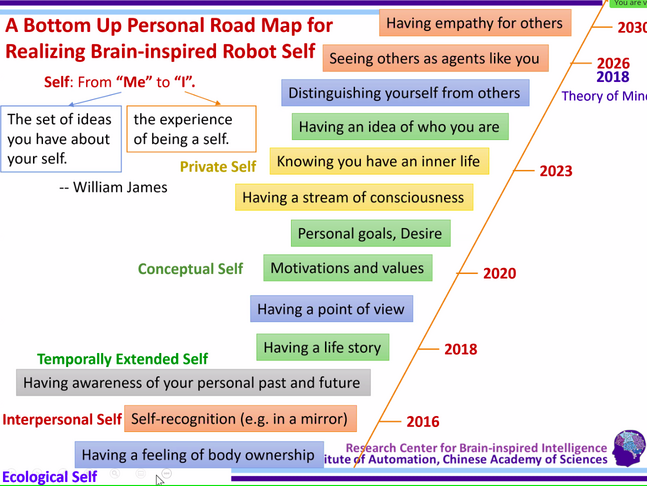

And these are still the easy parts, because up until now we have been dealing with tangible things. I've attached a road-map of the self-realizing robot above.

At this stage, we're dealing with Lacan: the ideas of self, identity, dualism, and qualia. These concepts are so complicated that a student of science such as me can have a full-fledged existential crisis just reading about them without fully understanding them.

And this is exactly where AI is headed. Fasten your seatbelts; this one's gonna be a bummmpy ride.